I'm playing with one of these at the moment:

http://www.ebay.co.uk/itm/281251283409

The default mode is as a UART. I was able to change the baud rate and the number of bits per character using the termios interface (as I was doing with the UARTs on the EXT connector earlier).
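For reference, a minimal sketch of the kind of termios calls involved. The helper names `configure_serial` and `open_serial` are made up for illustration, and the device path passed in (for example `/dev/ttyUSB0`) is an assumption about where the adapter shows up:

```c
#include <fcntl.h>
#include <termios.h>
#include <unistd.h>

/* Hypothetical helper: set speed and bits per character on a termios struct.
 * Returns 0 on success, -1 if bits is not 5..8 or the speed is rejected. */
int configure_serial(struct termios *tio, speed_t speed, int bits)
{
    tcflag_t size;

    switch (bits) {
    case 5: size = CS5; break;
    case 6: size = CS6; break;
    case 7: size = CS7; break;
    case 8: size = CS8; break;
    default: return -1;
    }

    tio->c_cflag &= ~CSIZE;                 /* clear the old character size */
    tio->c_cflag |= size | CLOCAL | CREAD;  /* new size, ignore modem lines, enable receiver */

    if (cfsetispeed(tio, speed) < 0 || cfsetospeed(tio, speed) < 0)
        return -1;
    return 0;
}

/* Hypothetical helper: open a serial device and apply the settings.
 * Returns the file descriptor, or -1 on failure. */
int open_serial(const char *path, speed_t speed, int bits)
{
    struct termios tio;
    int fd = open(path, O_RDWR | O_NOCTTY);

    if (fd < 0)
        return -1;
    if (tcgetattr(fd, &tio) < 0 ||
        configure_serial(&tio, speed, bits) < 0 ||
        tcsetattr(fd, TCSANOW, &tio) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}
```

Something like `open_serial("/dev/ttyUSB0", B9600, 7)` would then give 9600 baud with 7-bit characters, assuming the adapter is on that path.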

Libftdi compiled easily in CodeBlocks, though without the EEPROM part (it was missing some dependency, I forget which). Running the programs as root is awkward because sudo drops LD_LIBRARY_PATH; "sudo chmod o+w -R /dev/bus/usb" let them run as a regular user afterwards, for the current connection. I've heard the proper way is to make a udev rule, but I don't really understand that yet.
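For what it's worth, a udev rule for this would probably look something like the fragment below. It's a sketch, not something tested here: the file name is an arbitrary choice, the vendor/product IDs are the 0403:6001 pair used for the FT232R in the code further down, and MODE="0666" opens the device to every user, which may be more permissive than wanted.

```
# /etc/udev/rules.d/99-ftdi.rules  (assumed file name)
SUBSYSTEM=="usb", ATTR{idVendor}=="0403", ATTR{idProduct}=="6001", MODE="0666"
```

After adding the file, "sudo udevadm control --reload-rules" followed by replugging the adapter should apply it.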

The bitbang mode seemed interesting. The FT232R datasheet says it has a 128 byte FIFO buffer for storing bytes sent from USB to the UART; and in the asynchronous bitbang mode, "data packets can be sent to the device and they will be sequentially sent to the interface at a rate controlled by an internal timer".

This article was useful:

http://hackaday.com/2009/09/22/introduction-to-ftdi-bitbang-mode/

But apparently the bitbang mode is not very good:

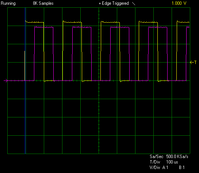

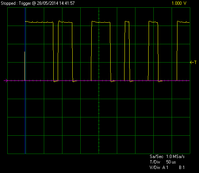

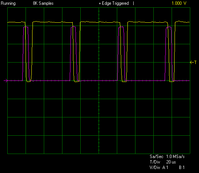

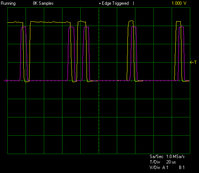

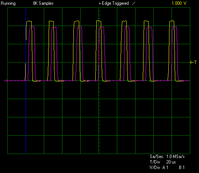

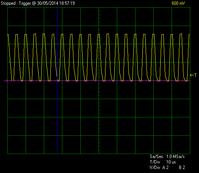

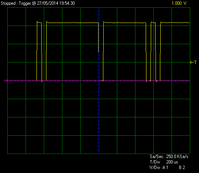

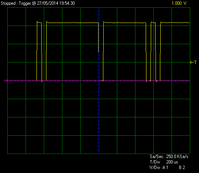

The actual behaviour is rather odd. According to the datasheet, the clock in bitbang mode is 16 times the specified baud rate, and the 8 bits in each byte are sent to 8 different pins at the same time, as parallel output. If I set the baud rate to 300, I expect the real rate to be 4800, so about 208us per tick. However, the scope trace shows bursts of 50us bits with delays in between.

The Hackaday article describes a similar limitation:

"Pulse width modulation makes for a nice visual demonstration of speed but unfortunately can’t really be put to serious use. In addition to the previously-mentioned I/O latency, other devices may be sharing the USB bus, and the sum total is that we can’t count on this technique to behave deterministically nor in realtime. PWM with an LED looks just fine to the eye…the timing is close enough…but trying to PWM-drive a servo is out of the question. For a synchronous serial protocol such as SPI, where a clock signal accompanies each data bit, this method works perfectly, and hopefully that can be demonstrated in a follow-up article."
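To make the "one byte = one tick of 8 parallel pins" idea concrete, here is a small sketch of how a PWM-style buffer could be built for bitbang output. The function name `fill_pwm` and its parameters are made up for illustration; it only prepares the bytes and doesn't touch the FTDI device:

```c
#include <stddef.h>

/* Hypothetical helper: fill buf with a repeating PWM pattern.
 * Each byte is one tick; the pins in mask are high for the first
 * on_ticks ticks of every period and low for the rest. */
void fill_pwm(unsigned char *buf, size_t len, unsigned char mask,
              size_t period, size_t on_ticks)
{
    size_t i;

    for (i = 0; i < len; i++) {
        /* period == 0 would divide by zero, so treat it as "always low" */
        buf[i] = (period != 0 && i % period < on_ticks) ? mask : 0;
    }
}
```

With period 2 and on_ticks 1 this gives a plain square wave alternating high and low on each tick; a longer period with a small on_ticks gives a low duty cycle. Whether the pulses come out evenly spaced on the pins is a separate matter, given the gaps seen on the scope.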

The really odd thing is that the average transfer rate was as expected for each of the baud rates I tried. The ftdi_write_data function blocks when the buffer is full; and when trying to send 100000 bytes for a test, it took the expected amount of time to return (for example, 20 seconds to execute with 100000 bytes at "300 baud"). Also, in UART mode, the output doesn't normally have gaps between the bytes.
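The arithmetic behind those expectations can be sketched as below, assuming the 16x clock multiplier from the datasheet and one byte consumed per tick. The function names are made up for illustration:

```c
/* Assumption: bitbang tick rate is 16 times the requested baud rate. */
double bitbang_tick_hz(int baud)
{
    return 16.0 * baud;
}

/* Duration of one tick (one byte on the pins) in microseconds. */
double bitbang_tick_us(int baud)
{
    return 1e6 / bitbang_tick_hz(baud);
}

/* Expected time in seconds to clock out nbytes at one byte per tick. */
double bitbang_seconds(int baud, long nbytes)
{
    return nbytes / bitbang_tick_hz(baud);
}
```

For "300 baud" this gives 4800 ticks per second, about 208us per tick, and a little under 21 seconds for 100000 bytes, which is in line with the roughly 20 seconds measured even though the individual bits on the scope are much shorter.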

I would have thought I must be doing something silly here, but it sounds like the author of the Hackaday article has the same problem.

Code:

```c
#include <stdio.h>
#include <ftdi.h>

#define LED1 0x08 /* CTS (brown wire on FTDI cable) */
#define LED2 0x01 /* TX (orange) */
#define LED3 0x02 /* RX (yellow) */
#define LED4 0x14 /* RTS (green on FTDI) + DTR (on SparkFun breakout) */

#define BUFLEN 100000

int main(void) {
    int i, n;
    struct ftdi_context ftdic;
    static unsigned char data[BUFLEN]; /* static keeps 100000 bytes off the stack */

    /* Square wave on LED1: high on even ticks, low on odd ticks */
    for (i = 0; i < BUFLEN; i++) {
        data[i] = (i % 2 == 0) ? LED1 : 0;
    }

    if (ftdi_init(&ftdic) < 0) {
        printf("unable to initialise libftdi\n");
        return 1;
    }

    /* 0x0403:0x6001 is the default FT232R vendor/product ID */
    if (ftdi_usb_open(&ftdic, 0x0403, 0x6001) < 0) {
        printf("unable to open device: %s\n", ftdi_get_error_string(&ftdic));
        return 1;
    }

    /* The bitmask selects which pins are outputs */
    if (ftdi_set_bitmode(&ftdic, LED1 | LED2 | LED3 | LED4, BITMODE_BITBANG)) {
        printf("unable to set bit mode: %s\n", ftdi_get_error_string(&ftdic));
        return 1;
    }

    if (ftdi_set_baudrate(&ftdic, 300)) {
        printf("unable to set baud rate: %s\n", ftdi_get_error_string(&ftdic));
        return 1;
    }

    /* Send the same buffer forever; one dot is printed per buffer */
    for (;;) {
        puts(".");
        n = ftdi_write_data(&ftdic, data, BUFLEN);
        if (n < 0) {
            printf("write failed: %s\n", ftdi_get_error_string(&ftdic));
            return 1;
        }
    }
}
```
