MadDog

Member

Hi, a few years ago I played about with the GP2X and had a lot of fun, but then sold it all when work got heavy and I had no time. For the last year or so I've been working with OMAP chips and other ImgTech-based SoCs, and I thought I'd pop by and give a warning about the discard function in a pixel shader.

The docs do say it's slow, but there is a rather nasty gotcha in there. Because GLES 2.0 shaders cannot share constants as you can in DX, you tend to write uber shaders. For the most part this is fine, but the discard function (mainly used for 1-bit stencil-type ops) is slow. This is because the hardware does the Z rejection before the pixel shader is run, so if discard is called the chip has to do a 'loop back' to correct the Z buffer, and this can halve the performance. Now here is the gotcha! When the shader compiler sees discard being used it flags the shader, and this loop back is turned on for all pixels, even if discard is never executed (normally because a uniform is set to false).
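Since the flag is set just by discard appearing in the shader, it can be handy to audit which shaders in a project contain it at all. Here is a rough sketch of such a check in Python. The shader text, the uniform name `u_alpha_test` and the helper `uses_discard` are all made up for illustration, and the check only looks at the source text, which is an approximation of what the real compiler does.

```python
import re

# Matches // line comments and /* block */ comments (GLSL has no string literals).
_COMMENT = re.compile(r"//[^\n]*|/\*.*?\*/", re.DOTALL)
_DISCARD = re.compile(r"\bdiscard\b")

# Hypothetical uber shader: discard is guarded by a uniform, but it is still
# present in the source, which is the pattern described above.
UBER_SHADER = """
precision mediump float;
uniform sampler2D u_texture;
uniform bool u_alpha_test;
varying vec2 v_uv;
void main() {
    vec4 colour = texture2D(u_texture, v_uv);
    if (u_alpha_test && colour.a < 0.5) discard;
    gl_FragColor = colour;
}
"""

def uses_discard(glsl_source: str) -> bool:
    """Return True if the discard keyword appears outside of comments."""
    stripped = _COMMENT.sub(" ", glsl_source)
    return _DISCARD.search(stripped) is not None

if __name__ == "__main__":
    print(uses_discard(UBER_SHADER))  # True, even though a uniform guards it
```

Any shader this reports as True is a candidate for splitting, whatever value the toggle uniform has at run time.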

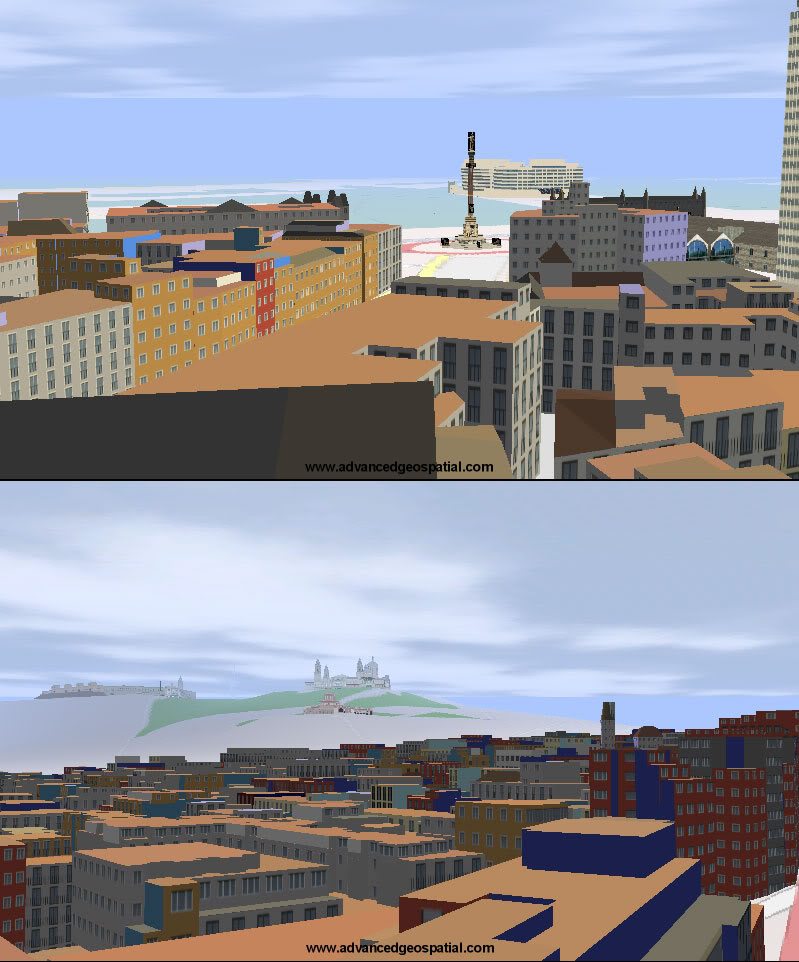

I found this out with the software I'm working on. It's a 3D satnav (buildings, landmarks, landscape; it looks like Google Earth in your hand). I was using 1-bit alpha, and so discard, so that the landmarks can have complicated outlines without needing thousands of polygons. When I added the code the app's frame rate halved! After TI looked into it, it turned out that because I 'may' use discard in the shader, the loop back was on 100% of the time. I'm currently trying to get a GL extension added so that the app can turn this loop back off when the uniform used to toggle 1-bit alpha is set to false.

Currently the best way round the problem is to separate the shaders out so that discard is only in a shader if it's needed for every pixel. It is an odd situation to be in, as in the 20 years I've been writing 3D games and apps, 1-bit alpha has always been the fastest type of alpha available. Some of the first 3D cards only had 1-bit (stencil) alpha!
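To give an idea of what separating the shaders out can look like, here is a minimal sketch that builds two fragment shader sources from shared pieces, so the opaque variant never contains discard at all. It is written in Python just to show the string assembly; the shader body and the names (`u_texture`, `v_uv`, `build_fragment_source`) are assumptions for illustration, not the code from my app.

```python
# Shared start of the fragment shader (hypothetical names throughout).
FRAGMENT_HEAD = """\
precision mediump float;
uniform sampler2D u_texture;
varying vec2 v_uv;
void main() {
    vec4 colour = texture2D(u_texture, v_uv);
"""

# Only the cut-out variant gets this line.
ALPHA_TEST_LINE = "    if (colour.a < 0.5) discard;\n"

# Shared end of the fragment shader.
FRAGMENT_TAIL = """\
    gl_FragColor = colour;
}
"""

def build_fragment_source(alpha_test: bool) -> str:
    """Assemble the shader text, leaving discard out entirely unless requested."""
    body = ALPHA_TEST_LINE if alpha_test else ""
    return FRAGMENT_HEAD + body + FRAGMENT_TAIL

# Two separate sources, to be compiled into two separate programs.
OPAQUE_SOURCE = build_fragment_source(alpha_test=False)
CUTOUT_SOURCE = build_fragment_source(alpha_test=True)

if __name__ == "__main__":
    print("discard" in OPAQUE_SOURCE)  # False
    print("discard" in CUTOUT_SOURCE)  # True
```

The app then binds the cut-out program only for the geometry that really needs 1-bit alpha (the landmarks in my case) and the opaque one for everything else, instead of flipping a uniform on one uber shader.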

Anyway, as soon as this little baby is out I'm going to get one. The OMAP3 is a peach of a chip. From what I've been doing, think Xbox1 or GC type power but with better shaders! It's very fast, I love it.

