how oldschool graphics work - save RAM - color tricks

- Thread starter FBnil

Godmil

Active Member

What an awesome video, thanks for the heads-up.

TeDaDeS

Forum Addict!

Good stuff. I only ever made text-based games on the C64, so it's nice to see some clever color tricks.

fusion_power

Advanced Member

I wish they could carry such honorable old virtues over into modern games; then titles like the recent CoD or Battlefront wouldn't eat so many resources (Black Ops 3 can eat as much as 6 GB of VRAM without any trouble... for what?!)

erico

Advanced Member

The Apple II and TRS-80 were exactly why I checked out the video; I love those bizarre color techniques.

If I had the free time, I'd like to write a system to convert b/w images to that set of colors for use in a modern game.

I did extract the colors and color patterns from the CoCo, so at least I can mimic it somehow.

levi

Still fresh, damnit!

The Apple II colour tricks don't seem that tricksy to me. It's just like writing 1-bit (black and white) graphics data to a colour mode, or vice versa.

One thing I could never quite get my brain around, on the BBC Micro at least, was the screen layout. Graphics bytes were arranged horizontally, with the LSB of a byte appearing to the right of the MSB. But the next byte was placed directly below the first one, until the column was 8 bytes deep. Only then was the next byte rendered directly to the right of the first byte. I guess it was done like that so it was quicker to write out aligned characters, such as text rendered to the screen: all the data for a single character is contiguous that way, whereas with a more straightforward striped arrangement (as in most bitmaps) each line of the character would need to be output 39 bytes later (in a monochrome, 40-character-wide mode at least). But it didn't half fry my brain when trying to render graphics primitives to the screen in machine code.
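To make that layout concrete, here is a rough Python sketch of the address arithmetic it implies. The names (`cell_offset`, `linear_offset`, `bit_mask`, `plot`) and the 40-byte row width are assumptions for illustration, taken from the monochrome, 40-character-wide case described above; offsets are relative to the start of screen memory, whose real base address is not given here.

```python
BYTES_PER_ROW = 40   # assumed: monochrome mode, 40 characters (bytes) wide
CELL_HEIGHT = 8      # bytes stacked vertically before stepping to the right


def cell_offset(x, y):
    """Byte offset of pixel (x, y) in the character-cell layout."""
    cell_row = y // CELL_HEIGHT      # which row of character cells
    cell_col = x // 8                # which cell along that row
    line_in_cell = y % CELL_HEIGHT   # which of the cell's 8 stacked bytes
    return (cell_row * BYTES_PER_ROW * CELL_HEIGHT
            + cell_col * CELL_HEIGHT
            + line_in_cell)


def linear_offset(x, y):
    """Byte offset of the same pixel in a plain striped bitmap, for comparison."""
    return y * BYTES_PER_ROW + x // 8


def bit_mask(x):
    """Mask for the pixel inside its byte: MSB is leftmost, LSB rightmost."""
    return 0x80 >> (x % 8)


def plot(screen, x, y):
    """Set one pixel in a bytearray laid out in character cells."""
    screen[cell_offset(x, y)] |= bit_mask(x)


screen = bytearray(BYTES_PER_ROW * 256)
plot(screen, 9, 10)
```

Moving one pixel down inside a cell adds 1 to the offset, moving one cell to the right adds 8, and moving down to the next row of cells adds 320, which is why line and circle routines need the extra bookkeeping.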

I wish they could carry such honorable old virtues over into modern games; then titles like the recent CoD or Battlefront wouldn't eat so many resources (Black Ops 3 can eat as much as 6 GB of VRAM without any trouble... for what?!)

I suppose for rendering stuff, but yeah, I'm pretty sure the next CoD would sell well if it had 1bpp graphics output (less than 256 kB of VRAM in HD!) ...

Moreover, I can't see how you could apply those "old virtues" to the graphics pipeline.
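The 1bpp figure is easy to check. A small sketch, assuming "HD" means 1920×1080 (the post doesn't say) and using a hypothetical helper `framebuffer_bytes`:

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Size of one uncompressed frame in bytes, rounded up to a whole byte."""
    return (width * height * bits_per_pixel + 7) // 8


# Assumption: "HD" is taken to be 1920x1080.
hd_1bpp = framebuffer_bytes(1920, 1080, 1)
hd_32bpp = framebuffer_bytes(1920, 1080, 32)

print(hd_1bpp, "bytes =", hd_1bpp / 1024, "KiB")           # 259200 bytes = 253.125 KiB
print(hd_32bpp, "bytes =", hd_32bpp / (1024 * 1024), "MiB")
```

So a single 1bpp frame at that resolution does fit under 256 KiB, while the same frame at 32 bits per pixel is 32 times larger.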

Hồng Thất Công

Đả Cẩu Bổng Pháp

I tip my hat to those old-school graphic artists.

levi

Still fresh, damnit!

That palette switching trick always impressed me back in the day, although in practice I only ever saw it done every few character lines, not per byte as demonstrated in those videos. By my maths, a single line of a character (one screen byte) gets printed to a square-pixel (40-character-wide) PAL display 400k times a second. The 6502 CPU, for example, tended to run at 2 MHz, meaning there were only 5 CPU ticks per screen byte. When writing out a register takes at least 3 ticks, and keeping a counter capable of counting high enough takes 4 ticks, it's not really practical to change palettes on every screen byte on those old machines.
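One way to reproduce that back-of-the-envelope budget is sketched below. The 200 visible lines and 50 frames per second are my assumptions, picked because they give the 400k figure; the post only states the 40-byte width, the 2 MHz clock and the 3- and 4-tick costs. The function name is made up for the example.

```python
def cycles_per_screen_byte(cpu_hz, bytes_per_line, visible_lines, frames_per_second):
    """Average CPU cycles available per screen byte drawn."""
    bytes_per_second = bytes_per_line * visible_lines * frames_per_second
    return cpu_hz / bytes_per_second


CPU_HZ = 2_000_000     # 2 MHz 6502, as stated in the post
BYTES_PER_LINE = 40    # 40-character-wide monochrome mode, as stated
VISIBLE_LINES = 200    # assumption, chosen to match the 400k figure
FRAMES_PER_SECOND = 50 # assumption: PAL field rate

budget = cycles_per_screen_byte(CPU_HZ, BYTES_PER_LINE, VISIBLE_LINES, FRAMES_PER_SECOND)

# Costs quoted in the post: a register write plus keeping a counter.
cost_per_change = 3 + 4

print(budget)                     # 5.0
print(cost_per_change <= budget)  # False
```

With 7 ticks of work against a budget of 5, a palette change per byte doesn't fit, which matches the observation that games only switched a few times per frame.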

But a few games did switch palettes a few times per scan, which gave you some pretty high-res graphics that looked colourful. And BBC Micro Elite even changed screen mode between the vector display at the top and the console at the bottom. The vector display was done in square-pixel monochrome mode, while the console was in 4-colour double-wide pixel mode. That meant the video memory was only the 10k you'd need for full-screen mid-res two-colour, while still giving you a colourful console and leaving more RAM free for the complex game code.

What was also important about using low-RAM modes was that they gave you more time to run code. Those ~5 ticks per screen byte were halved if you tried to run a multicolour mid-res mode, because the video chip paused the CPU while it accessed the video RAM. Many games ran in 4-colour double-wide pixel (low-res) modes throughout, but Elite had a resolution boost over those.

fusion_power

Advanced Member

I suppose for rendering stuff, but yeah, I'm pretty sure the next CoD would sell well if it had 1bpp graphics output (less than 256 kB of VRAM in HD!) ...

Moreover, I can't see how you could apply those "old virtues" to the graphics pipeline.

I meant more resource-saving modern techniques, not "1bpp graphics". :rolleyes: For example, DX12/Vulkan are a good step in the right direction, if done right. And repeating textures are not a bad way to save VRAM; modern games tend to use stuff like unique "Mega Textures" that eat up a lot of resources. You don't even need ultra-high-res textures if you simply add "detail texture" layers at close range. Unreal did this back in 1998 and it looked amazing. I don't know of any modern game that still uses detail textures; maybe the recent UT project, but I haven't tested it yet.

levi

Still fresh, damnit!

Ah, you mean mip-mapping. I'm not sure how much VRAM you can save using that though, as the highest-detail mipmap is still going to be huge, unless you want repetitive textures that look bad from a distance. I think mip-mapping still happens by the way; they've just got cleverer at hiding the joins when you move from one level to another, so you don't see it any more.

fusion_power

Advanced Member

Proper mip-mapping with proper anisotropic filtering works well, but this is not the only trick. Imagine an additional texture layer over the normal textures when you come very close to a wall, surface or whatever. So you see even more structure and detail without the need for a generally high-res texture. This is what the Unreal Engine does, and one of the reasons I sometimes just stood in front of a wall when playing Unreal 1 back then. XD

Today, this is often done with Normal Mapping, Bump Mapping, Shaders, etc.

http://udn.epicgames.com/Three/TerrainAdvancedTextures.html

https://docs.unrealengine.com/latest/INT/Engine/Rendering/Materials/HowTo/DetailTexturing/index.html
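As a rough illustration of the idea (not Unreal's actual implementation, which I haven't checked), a detail layer can be sketched as a small, heavily tiled texture that modulates the base texture and fades out with distance. Everything here is an assumption for the example: greyscale values in 0..1, nearest-neighbour sampling, the fade distances, the repeat count, and the function names.

```python
def detail_weight(distance, fade_start=2.0, fade_end=8.0):
    """1.0 up close, fading linearly to 0.0 at fade_end (assumed distances)."""
    if distance <= fade_start:
        return 1.0
    if distance >= fade_end:
        return 0.0
    return (fade_end - distance) / (fade_end - fade_start)


def sample_tiled(texture, u, v, repeat):
    """Nearest-neighbour sample of a 2D list, tiled `repeat` times over 0..1."""
    height, width = len(texture), len(texture[0])
    x = int(u * repeat * width) % width
    y = int(v * repeat * height) % height
    return texture[y][x]


def shade(base, detail, u, v, distance, detail_repeat=8):
    """Base texel modulated by a tiled detail texel that fades with distance."""
    base_value = sample_tiled(base, u, v, 1)
    detail_value = sample_tiled(detail, u, v, detail_repeat)
    weight = detail_weight(distance)
    # A detail value of 0.5 is neutral; brighter or darker texels scale the base.
    factor = 1.0 + weight * (2.0 * detail_value - 1.0)
    return min(1.0, max(0.0, base_value * factor))


base = [[0.4]]                        # one flat low-res texel
detail = [[0.5, 1.0], [0.0, 0.5]]     # tiny repeating detail pattern
near = shade(base, detail, 1 / 16, 0.0, distance=1.0)
far = shade(base, detail, 1 / 16, 0.0, distance=100.0)
```

Up close the detail pattern changes the result, while far away the weight drops to zero and only the base texel is left, so the small detail texture adds apparent resolution without a larger base texture.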

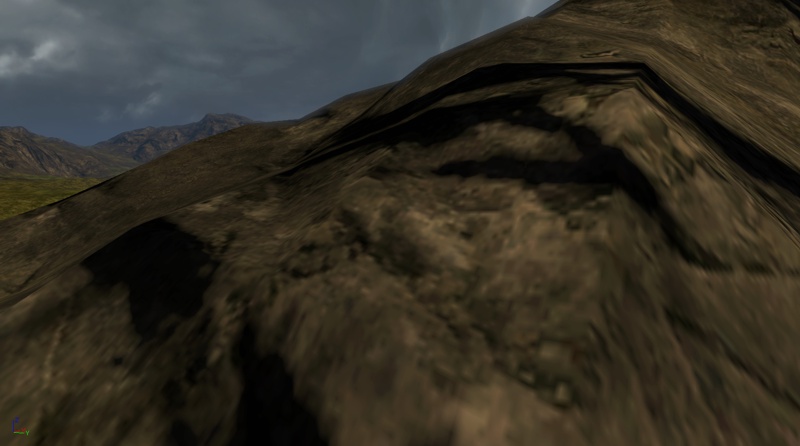

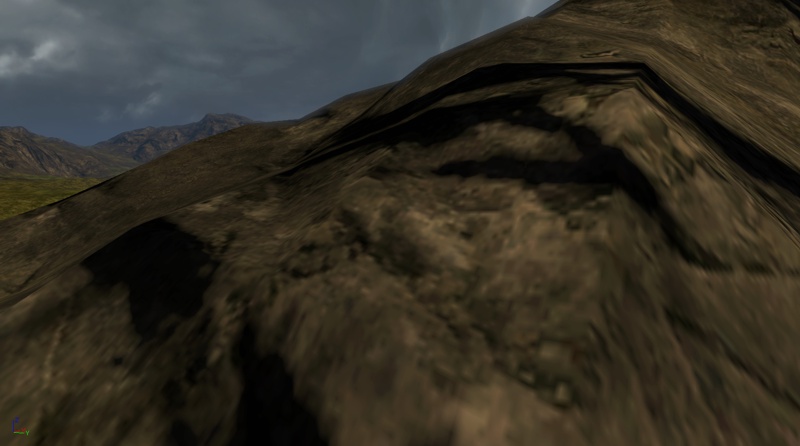

Simple Textures without Detail textures:

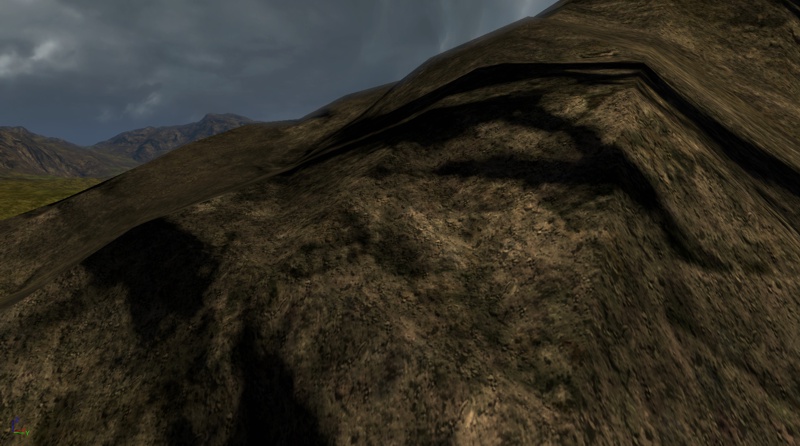

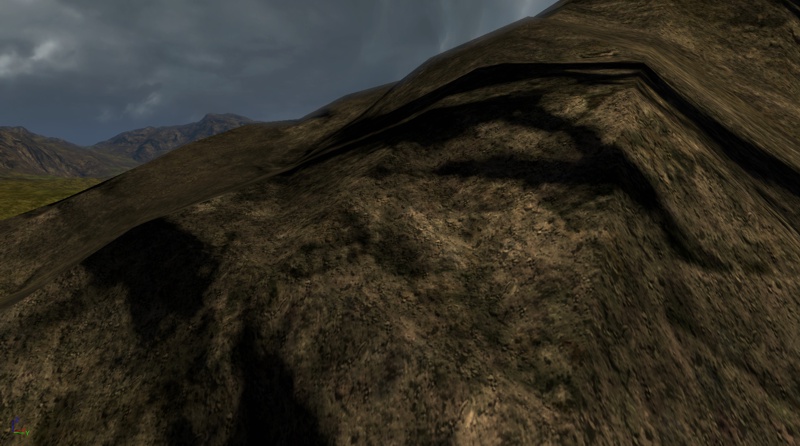

Additional detail texture layer on top at close range:

Nice videos.

It reminded me of an electronics engineering project I made, which aimed to reproduce what a video chip does using a CPLD with 128 macrocells (i.e. 128 bits of memory). I programmed the CPLD to:

- Generate all the pulse timings necessary to build an NTSC video signal. There was one counter for vertical lines and another that ran along each horizontal line.

- Read video data from two 8 Kbit RAM chips, interleaving reads between the chips to achieve the required speed (the maximum read speed of a single RAM chip wasn't enough).

- Generate 16-level grayscale pixels via PWM (one pulse = one pixel) using data read from RAM.

- Connect to the bus of a 16-bit microcontroller to allow writing data to the video RAM chips.
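Two of the steps in that list, the interleaved reads and the PWM grayscale, can be modelled in software. This is only a sketch of the general techniques, not the CPLD logic from the project; the function names and the choice of 15 time slots per pixel are assumptions.

```python
def split_into_banks(pixels):
    """Even-indexed pixels go to bank A, odd-indexed pixels to bank B."""
    return pixels[0::2], pixels[1::2]


def interleaved_read(bank_a, bank_b):
    """Yield pixels in display order, alternating between the two banks.

    Each bank is only accessed on every other pixel, so each chip gets
    twice as long to respond as a single chip would.
    """
    for i in range(len(bank_a) + len(bank_b)):
        bank = bank_a if i % 2 == 0 else bank_b
        yield bank[i // 2]


def pwm_pulse(level, slots=15):
    """One pixel as a single pulse: `level` of `slots` time slots are high."""
    if not 0 <= level <= slots:
        raise ValueError("level must be between 0 and slots")
    return [1] * level + [0] * (slots - level)


pixels = [0, 15, 7, 8, 3]             # 4-bit grey levels, 0..15
bank_a, bank_b = split_into_banks(pixels)
restored = list(interleaved_read(bank_a, bank_b))
waveform = [slot for level in restored for slot in pwm_pulse(level)]
```

The average of each pixel's 15 slots is its brightness, so levels 0 to 15 give the 16 grey shades once the display smooths the pulse out.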

The microcontroller received the image data via serial RS-232, writing it to the video RAM chips as it was received. Writing a full image took about 2-3 seconds. Yes, the microcontroller was that slow. ^_^ But once the image was in video RAM, it was displayed perfectly on the NTSC TV. The image was even visible as it was being written, with only a little bit of distortion.

There are probably (definitely) easier ways to achieve the same thing, though. :lol:
